My original guide on how to help fix screen-tearing on Linux with an NVIDIA GPU is a bit dated, so here’s an even easier way.

Notes

You will likely need the 375.26 driver or newer for this to show up in "nvidia-settings".

These options may cause a loss in performance. For me personally, the loss is next to nothing.

It probably won't work with Optimus right now, but this may be fixed in future.
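You can check which driver you are on from a terminal before opening nvidia-settings. The sketch below makes two assumptions. First, `nvidia-smi`, which normally ships with the proprietary driver, is installed. Second, your `sort` supports `-V`, as GNU coreutils does. `version_at_least` is a hypothetical helper name, not part of any NVIDIA tool.

```shell
#!/bin/sh
# Minimal sketch: report whether the installed NVIDIA driver is 375.26 or newer.
# Assumes nvidia-smi is installed and that sort supports -V (GNU coreutils).

REQUIRED="375.26"

# version_at_least A B: succeed if version A >= version B.
# Sorts both versions and checks that B comes out as the smaller one.
version_at_least() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

if command -v nvidia-smi >/dev/null 2>&1; then
    # First GPU's driver version, e.g. "375.26"
    installed=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n1)
    if version_at_least "$installed" "$REQUIRED"; then
        echo "Driver $installed: the composition pipeline options should be available"
    else
        echo "Driver $installed is older than $REQUIRED: update it first"
    fi
else
    echo "nvidia-smi not found; check your driver version with your package manager"
fi
```

Comparing with `sort -V` rather than as plain strings matters here, because something like 375.100 has to count as newer than 375.26.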

What to do

Previously you needed to edit config files, and it was a little messy. Thankfully, NVIDIA added options in nvidia-settings to essentially do it all for you. The options were added in a more recent NVIDIA driver version, so be sure you're up to date.

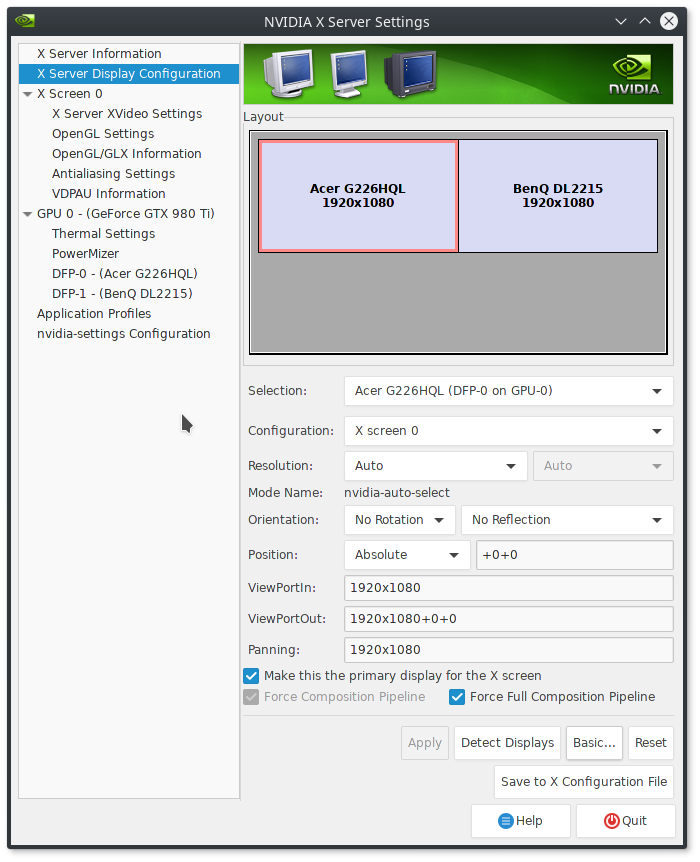

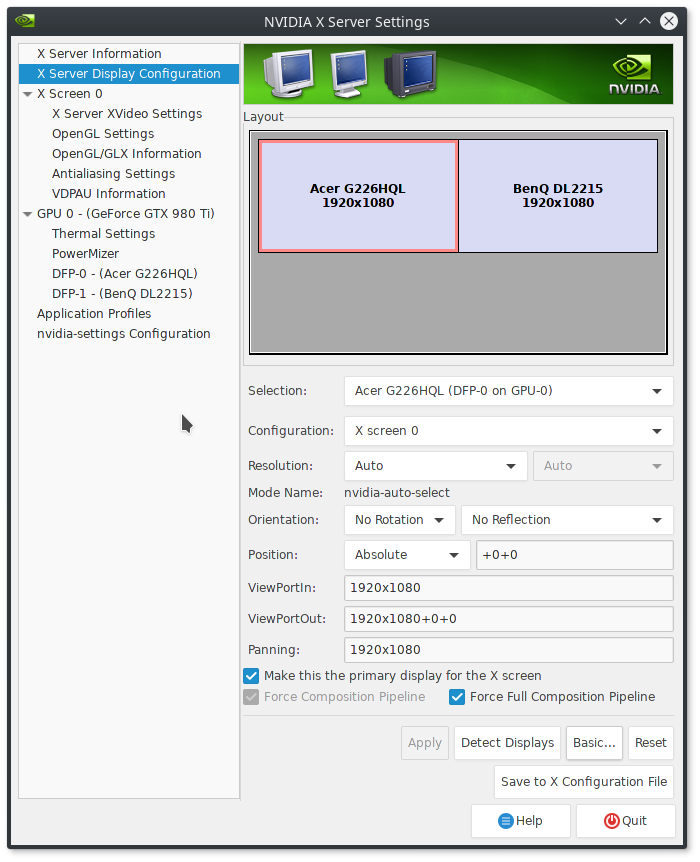

Load "nvidia-settings" and you will need to go to this screen and then hit “Advanced” at the bottom (my screenshot doesn't have the button, as this is what you see after you hit it):

Tick the boxes for “Force Composition Pipeline” and “Force Full Composition Pipeline” and then hit "Apply".

You can then enjoy a tear-free experience on Linux with an NVIDIA GPU. It really is that damn easy now.
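You can confirm from a terminal that the option stuck after hitting "Apply" by asking nvidia-settings for the current MetaMode. This sketch assumes it runs inside your X session, so `DISPLAY` is set. The exact wording of the query output may differ between driver versions, so the check only looks for `ForceFullCompositionPipeline` set to `On`, with or without spaces around the `=`. `pipeline_enabled` is a hypothetical helper name.

```shell
#!/bin/sh
# Minimal sketch: check whether ForceFullCompositionPipeline is active.
# Assumes nvidia-settings can reach the running X server (DISPLAY is set).

# pipeline_enabled TEXT: succeed if TEXT sets ForceFullCompositionPipeline to On
pipeline_enabled() {
    printf '%s\n' "$1" | grep -Eq 'ForceFullCompositionPipeline *= *On'
}

if command -v nvidia-settings >/dev/null 2>&1 && [ -n "${DISPLAY:-}" ]; then
    metamode=$(nvidia-settings --query CurrentMetaMode 2>/dev/null)
    if pipeline_enabled "$metamode"; then
        echo "Full composition pipeline is on"
    else
        echo "Full composition pipeline does not appear to be on"
    fi
fi
```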

Note: You will likely need to run nvidia-settings with “sudo” for the following step to work.

If you want this applied automatically on every startup, hit “Save to X Configuration File”. I have mine saved at “/etc/X11/xorg.conf.d/xorg.conf” on Antergos, but your location may be different. If an xorg.conf file already exists, I recommend backing it up first.
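Before saving, you can back up an existing config file with a minimal sketch like the one below. Run it with sudo, since /etc/X11 is normally writable only by root. The `backup_file` function and the timestamped `.bak` suffix are my own choices. `CONF` uses the Antergos path from this guide, so point it at whatever file nvidia-settings will write on your system.

```shell
#!/bin/sh
# Minimal sketch: back up an existing X config before nvidia-settings overwrites it.
# CONF is the Antergos path used in this guide; adjust it for your distribution.
CONF="/etc/X11/xorg.conf.d/xorg.conf"

# backup_file PATH: copy PATH to PATH.bak.<timestamp>, keeping permissions and times
backup_file() {
    if [ -f "$1" ]; then
        cp -p "$1" "$1.bak.$(date +%Y%m%d%H%M%S)" && echo "Backed up $1"
    else
        echo "No existing $1, nothing to back up"
    fi
}

backup_file "$CONF"
```

If a new config breaks X, copying the `.bak` file back from a text console restores the previous setup.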

This step isn't needed, but it's a fun and useful extra!

I also have mine saved as a script bound to a keyboard shortcut. It helps when a game leaves the desktop at a low resolution after exiting, or turns off a second monitor: the shortcut turns everything back on.


For that I manually set the resolution like so:

```shell
#!/bin/sh
nvidia-settings --assign CurrentMetaMode="DVI-I-1:1920x1080_60 +0+0 { ForceFullCompositionPipeline = On }, HDMI-0:1920x1080_60 +1920+0 { ForceFullCompositionPipeline = On }"
```

Edit that to match your setup, such as your resolution and monitor connections (you can list them by running "xrandr --query" in a terminal), then save it under an easy-to-remember filename. You can then bind it to a custom shortcut; I use “CTRL+ALT+F4” as it’s not used for anything else.
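If you change monitors often, you can build the MetaMode string rather than type it by hand. The helpers below are hypothetical (`connected_outputs` and `build_metamode` are my own names, not part of nvidia-settings or xrandr). They assume the output names xrandr reports, such as DVI-I-1 or HDMI-0, are the ones nvidia-settings accepts, as in the command above.

```shell
#!/bin/sh
# Hypothetical helpers for building the CurrentMetaMode string.
# Assumes xrandr output names match the names nvidia-settings accepts.

# connected_outputs: read `xrandr --query` output on stdin and print
# the name of every connected output, one per line.
connected_outputs() {
    awk '$2 == "connected" { print $1 }'
}

# build_metamode OUTPUT:MODE:OFFSET ...
# Turns each entry into "OUTPUT:MODE OFFSET { ForceFullCompositionPipeline = On }"
# and joins the entries with ", ".
build_metamode() {
    result=""
    for entry in "$@"; do
        output=${entry%%:*}   # text before the first ":", e.g. HDMI-0
        rest=${entry#*:}      # text after the first ":"
        mode=${rest%%:*}      # e.g. 1920x1080_60
        offset=${rest#*:}     # e.g. +1920+0
        part="$output:$mode $offset { ForceFullCompositionPipeline = On }"
        if [ -z "$result" ]; then
            result=$part
        else
            result="$result, $part"
        fi
    done
    printf '%s\n' "$result"
}
```

For example, `xrandr --query | connected_outputs` lists the names to use. Then `nvidia-settings --assign CurrentMetaMode="$(build_metamode DVI-I-1:1920x1080_60:+0+0 HDMI-0:1920x1080_60:+1920+0)"` produces the same MetaMode string as the two-monitor command above.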

This has been tested and works for me perfectly across Ubuntu Unity, Ubuntu MATE and Antergos KDE.


Sadly, Dying Light still gives me motion sickness; no tearing though xD


Quoting yzmo: "Somehow none of those checkboxes show up for me... :("

What driver version, what GPU, is it optimus?


Quoting liamdawe: "What driver version, what GPU, is it optimus?"

I'm running 375.26, no optimus. Perhaps it only works if multiple monitors are present as nvidia treats it as a per-monitor setting?


Quoting yzmo: "I'm running 375.26, no optimus. Perhaps it only works if multiple monitors are present as nvidia treats it as a per-monitor setting?"

No, it works with any amount of monitors.

Send a screenshot?


Here:


What version of nvidia-settings do you have?


Quote: "What version of nvidia-settings do you have?"

I always thought it was connected to the driver version, as it seems to install with the driver. Or is it the NV-CONTROL version?

Last edited by yzmo on 11 January 2017 at 10:53 pm UTC


nvidia-settings is its own package and is usually updated along with the driver. What does your package manager say its version is?


Hi,

I have been struggling to remove tearing on my laptop with a GTX960m.

Has anyone tested the latest release for xorg-server?

Xorg-Server-1.19.1-Released

Last edited by lukacl on 12 January 2017 at 12:58 am UTC


Okay, it seems like specifically upgrading that package worked. Somehow the package manager hadn't realised there was an update available. The boxes are now there. Thank you so much for the help. :)
