With an increasing number of games using AI, Epic Games' Tim Sweeney believes the AI disclosure notices on stores like Steam make no sense.

Currently, if a game released on Steam uses generative AI in some way, this needs to be disclosed. Developers have to fill out a content survey for their games, detailing things like mature content and AI use. First, as a reminder, here are Valve's own public rules on it:

If your game used AI services during development or incorporates AI services as a part of the product, this section will require you to describe that implementation in detail:

- Pre-Generated: Any kind of content (art/code/sound/etc) created with the help of AI tools during development. Under the Steam Distribution Agreement, you promise Valve that your game will not include illegal or infringing content, and that your game will be consistent with your marketing materials. In our prerelease review, we will evaluate the output of AI generated content in your game the same way we evaluate all non-AI content - including a check that your game meets those promises.

- Live-Generated: Any kind of content created with the help of AI tools while the game is running. In addition to following the same rules as Pre-Generated AI content, this comes with an additional requirement - in the Content Survey, you'll need to tell us what kind of guardrails you're putting on your AI to ensure it's not generating illegal content.

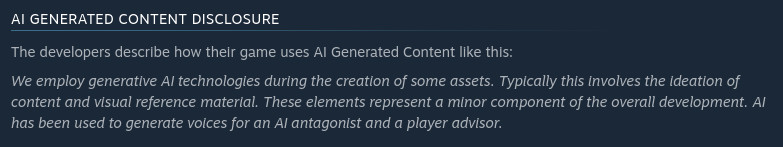

You've probably seen the notices buried at the bottom of Steam store pages, like this one for Stellaris from Paradox Interactive:

Which you can make much bigger and clearer so you don't miss them with a browser plugin covered here on GamingOnLinux recently.

Jumping into the discussion now is Epic Games CEO Tim Sweeney, posting a reply to someone on X (Twitter):

It's no surprise that Sweeney is in favour of it, given that Fortnite has previously used generative AI, such as a feature that let players chat with Darth Vader.

The situation with generative AI is evolving constantly, but even Sweeney here notes there are rights issues. To be more specific: many of the generative AI models in wide use were trained on material without the permission of the original authors. There have been so many lawsuits over it that I don't think I need to rehash any of them here; they're all reported on constantly and in depth elsewhere.

Even if it does end up everywhere, it's still good to know how it's being used, isn't it? It's a discussion that swings wildly between camps. AI slop makers and people in favour of AI generation will naturally not want these types of notices and will fight against them, while the other side no doubt values seeing the notice so they can make more informed purchasing decisions.

One thing is for sure: generative AI is complicating everything, companies keep enshittifying their products with it, and that's going to continue for some time.

What are your thoughts on the AI disclosures? Leave a comment.

Quoting: MayeulC
"The irony. An AI tag being relevant for art exhibits, but not videogames? Are videogames not art, then? It is telling how Tim thinks about games. But then, id Software games do not have a particularly high artistic value (subjectively, of course)."

Just FYI: Sweeney is the Epic / Unreal guy, not id Software.

Quoting: Arehandoro
"If a game uses AI models that have been trained on material without the consent of their authors (spoiler: all of them), I consider that game to be built illegally, and as such, I will obtain it and play it via 'illegal' methods."

Quoting: Mountain Man
"That is one of the dumber excuses for piracy I've come across. If a game uses generative AI, but you still want to play it, then pay your money and play it legally. Otherwise, stand on principle and don't play it at all."

In truth, in most cases, I won't want to play it, but in the cases that I do, if I can harm the business more by playing the game without paying, I will do so, whether it's dumb or not.

Quoting: Technopeasant
"@scaine Sorry, I wasn't clear. It's just as important. I just don't think that anyone other than the FSF would have the motivation to bring a case against the AI companies over code. It's also, as Kimyrielle points out, really hard to prove that AI was used in any given sample of code. It should still be disclosed, imo. It's still burning the planet and (probably) affecting jobs due to short-sighted or over-optimistic managers."

Why is code generation trivial while "art, music and voice acting" is inherently important?

Quoting: Kimyrielle
"Thing is that these tools DO make you more productive, if you're using them right (which is why they're getting adopted by coders)."

As I said, this is currently not the case. I'm sure there will be further studies on this, but right now, this study from July this year points to the opposite. While you think AI will make you more productive, in fact you are less so. It's a really interesting read.

https://metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study/

"While you think AI will make you more productive, in fact you are less so."

Vibe coding does benefit one class of programmer: contractors brought in to fix what the vibe coders screwed up.

Instead, I want to push back on some of the sentiment and talk about productivity. This idea that we should just bend over for the inevitable is a really sad mindset and reflects all too well the pitfalls of the modern world. The reality here is that Tim made that comment to get people fighting with each other instead: better the population fight about disclosure than about the real issues at hand.

I work in enterprise software, actively producing code, so I wanted to talk about that side of things. I am glad that a study was cited here; I will be interested to see how well my rough assessment of reality lines up with its findings. What I mean is that I do not understand how a person could consider generating complex code with one of these models more productive. Furthermore, it cuts out the part of the process where you learn and grow, which is a major issue in software development. I cannot speak to drawing or painting, but I am going to assume the same holds true there.

To break down what I am saying a bit further, let's start with productivity. Integrated Development Environments (IDEs) have been generating code for a long time. However, they usually do so by finishing lines or generating common methods. I use these features regularly because typing "return true" as "re", Enter, then "t", Enter is a productivity gain. The keystroke count goes down, but I can see at a glance that it does what I need it to. I might also generate the shell of a unit test class, which is basically a whole bunch of stub methods that I can then go through and fill out. The same goes for basic getters and setters, the methods that read and update an object's fields. The point is, I do not need to type "public String getName()" every time I have a string field called name, and I can validate in an instant that "return this.name" is what I need.

There are a whole lot of powerful tools in IDEs that speed up development and help produce better code as you become more familiar with them. So why would I disagree that generative AI would produce code faster or better? The answer is that the more complex the generated code gets, the less likely I am to catch, at a glance, where it isn't doing what I want. "return true" is easy to verify in an instant, whereas a full logical loop with multiple return values is not. This means I need to review and verify the results and in many cases writing the code from scratch would just be faster.

This is also just the first part of the process, because as you iterate over that bit of code and implement it, there is a lot of value in understanding how it works. This is part of why code readability matters so much when working in a team. So, if I generate some code and later find that it does not work, I have to spend time breaking the generated code down even further before I can write the fix. If I had written that code myself, I would have an intrinsic understanding of it, because I had to reason out the logic to do so. My point is that the actual process of writing the code is a very important part of making quality software, and it directly translates to productivity and speed within a code base.

The second point I made is that by skipping the step where you write the code, you lose valuable understanding, and this is a problem in the development space in general. I regularly run into people who do not understand the code they produce. This may come from looking it up and blindly copying a Stack Overflow answer or, now, generating it with a model that was trained on those answers. Either way, the result is the same: they never let questions become answers, they just have results. I have said for a long time that my biggest issue with maths classes when I was growing up was that you were taught that "the answer is the only thing that matters, and those can be found at the back of the book". When I was younger, I never really grasped how accurate this frustration was; it only clicked once I started working on code with people who simply produced results.

You see, the message I took away was that the process of getting to 1+1=2 didn't matter, and that all you had to do was memorize what the symbols did and the steps for things like long division. However, this is a very flawed way to look at maths, because what actually matters is understanding WHY 1+1=2 and how that process works. A rich understanding of why lets you apply the concepts in broader and more complex ways and builds a basis for mastery.

AI models that produce code remove the understanding part of coding, and in a way they do so more thoroughly than a looked-up answer, which you at least have to adapt to your specific use case. I have always said that it's okay to look up how to do something, and I even encourage it, but I also always say "it is extremely important that you do not use that answer until you actually understand why it solves the problem". Asking questions and understanding answers is a fundamental skill that needs regular practice to maintain, and these tools cut that process out. That means even if they are productive now, they will be less so later, as the user relies more on the magic answer box and less on their own understanding and skill.

It is this reasoning that I also state to my peers that it's okay to generate code, but you must also take the time to review and understand how it works before implementing it. Using these tools "correctly" in that light means an active reduction in productivity, since you have to spend that extra effort to gain what you would have gained by puzzling it out and implementing it yourself.

The technology behind these things *is* very powerful and *does* have value, but not in creative spaces (and I do consider coding to be creative). A creative field requires understanding and technique, neither of which generative AI can provide, and worse, using these tools actively robs the creator of skills they should be developing.

Quoting: FIGBird
"This means I need to review and verify the results and in many cases writing the code from scratch would just be faster."

I am experiencing the same thing in translation. A lot of companies are now paying people to review and edit machine translations rather than come up with their own translations. Supposedly this saves time, and more importantly it pays a lot less. But I have to spend so much time, and be so laser-focused on making sure that the LLM didn’t use false cognates, or slightly misinterpret a phrase, or switch pronouns mid-paragraph, or start using a different word to translate a recurring term, or use the wrong punctuation, or mess up the formatting… It would be so much easier to make my own translation and trust that I obviously would use consistent terminology, obviously not forget what pronoun or punctuation to use, etc., and only need to review my text for typos and stylistic tweaks. The speed gain ends up being negligible, and comes at the price of creativity, originality and doing work I actually enjoy instead of painstakingly fixing the mistakes of an algorithm.

Quoting: FIGBird
"It is this reasoning that I also state to my peers that it's okay to generate code, but you must also take the time to review and understand how it works before implementing it."

I will admit I don’t always do that, but I agree it must be done. With ChatGPT and the likes, though, it hasn’t been an issue: the coding answers it gives me never work as-is and only serve to remind me of certain functions or methods that could be useful to solve my problem (or introduce me to them, since I’m only an amateur programmer). Then I go look them up in actual documentation or SO and come up with my own implementation.

"People can say close to 100% of companies are/will be using AI for coding, but what they mean is 'some kind of clump in my immediate view are all doing it'. I don't think anyone actually knows how prevalent or otherwise it really is."

Indeed. I'm regularly checking mastodon.gamedev.place, and from what I read there, my impression would be the opposite, namely that LLM tools are barely used for coding.

(As far as I am aware, at my workplace we do not use generative AI for anything that goes into our final products. We do use it for placeholder artwork and for writing shell scripts or other tooling that doesn't end up in the games though.)

"The speed gain ends up being negligible, and comes at the price of creativity, originality and doing work I actually enjoy instead of painstakingly fixing the mistakes of an algorithm."

The ultimate promise of AI is freeing us from the drudgery of work we enjoy so we can focus on tedious editing and error-checking at intern rates.

"The speed gain ends up being negligible, and comes at the price of creativity, originality and doing work I actually enjoy instead of painstakingly fixing the mistakes of an algorithm."

Quoting: Nezchan
"The ultimate promise of AI is freeing us from the drudgery of work we enjoy so we can focus on tedious editing and error-checking at intern rates."

This is also something I feel needs to be said more. A lot of the joy I get from coding is creating those logic flows and understanding and growing that skill. I think far too many people lose sight of this when they talk about how AI improves productivity or whatever. I do not use these things because I don't find value in them.

Quoting: FIGBird
"It is this reasoning that I also state to my peers that it's okay to generate code, but you must also take the time to review and understand how it works before implementing it."

Quoting: Salvatos
"I will admit I don’t always do that, but I agree it must be done. With ChatGPT and the likes, though, it hasn’t been an issue: the coding answers it gives me never work as-is and only serve to remind me of certain functions or methods that could be useful to solve my problem (or introduce me to them, since I’m only an amateur programmer). Then I go look them up in actual documentation or SO and come up with my own implementation."

I do not use genAI at all, so I don't often consider that you end up having to do that work anyway. I also don't want to seem like I'm putting down or shaming folks who don't take the time to understand the answers. I do think it is important, but there is also a time and place for what Tom Scott would call bodging it together. I merely wanted to illustrate that actually growing, learning and becoming a real expert requires that step, and it should be one you make an effort to take every time.

I brought up the failures of my maths education partly as a proxy, too. What I think schooling did was snuff out my joy in learning. This is a big reason I do not have a degree and did not go to college. I had to rebuild my love of learning and the joy of exploring new things, and I did that while teaching myself a craft, so as a result I put a lot of value on the step of understanding, as it is a huge part of what I love.

"We should start embracing what cannot be stopped anyway."

That’s good advice! We should apply it to more parts of our life. Let’s say, for the sake of argument…

rape?

Quoting: Jahimself
"Then if AAA can steal from anyone, anyone should be able to steal from Tim Sweeney and major company having the same philosophy. Everyone should be able to use AI and hack every Tim Sweeney computer and engineer hardware, to use it for different purpose with no label or prior consent."

If buying doesn't make you an owner, pirating also doesn't make you a robber.

"The speed gain ends up being negligible, and comes at the price of creativity, originality and doing work I actually enjoy instead of painstakingly fixing the mistakes of an algorithm."

Quoting: Nezchan
"The ultimate promise of AI is freeing us from the drudgery of work we enjoy so we can focus on tedious editing and error-checking at intern rates."

The assumption of AI replacing jobs was based on the premise that, for the majority of the population, a sloppy product is acceptable, which I think is unfortunately quite correct, and the CEOs know that. Some will be willing to abandon the more demanding part of their clientele if the company ends up with a net gain from cutting the costs of actual human input and QA of the AI-produced slop. Others will see an opportunity in entering the newly created niche. This will ultimately create a polarized market, not unlike the current distinction between mainstream and luxury goods. The question remains how many companies will succeed in navigating the middle ground...

Quoting: Jahimself
"Then if AAA can steal from anyone, anyone should be able to steal from Tim Sweeney and major company having the same philosophy."

The ultimate irony: the companies that were shouting the loudest about software piracy are now the quickest to embrace blatant IP theft where it suits them.
