With more and more game developers creating AI slop, or just using AI generation for stupid things in their games, we need more ways to avoid them.

The problem for me is that I'm so busy it's just easy to miss. The AI notice on a Steam page is buried in small writing at the bottom of the store page. Steam itself currently has no filter for it either, although the community site SteamDB does, and the list is a lot bigger than I expected. 12,284 games on Steam have an AI disclosure according to SteamDB at time of writing. I was going to say "that's a crazy number!", but it's not really, is it? I'm sure many GamingOnLinux readers fully expected a lot of developers to end up pushing out slop with AI generation.

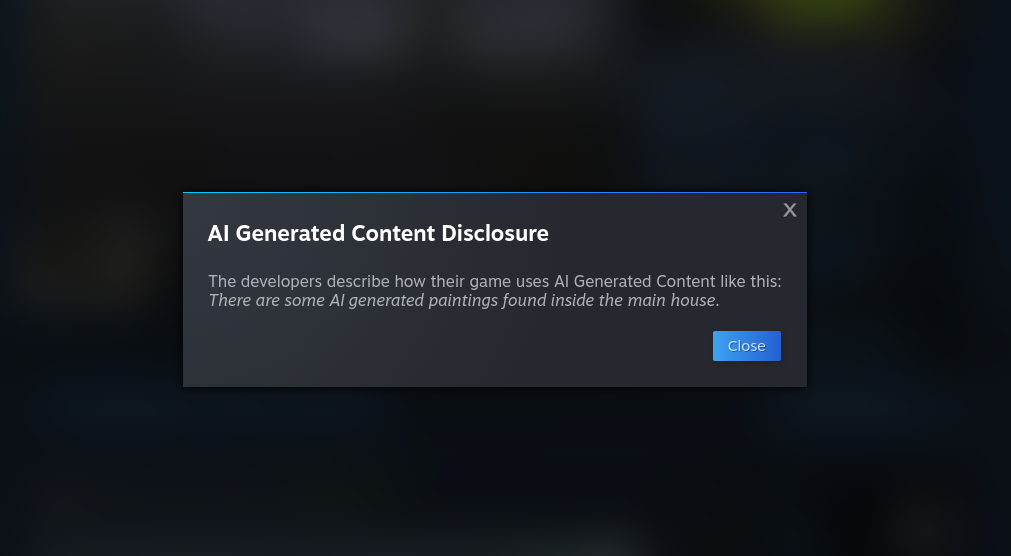

Thankfully, an open source browser userscript was pointed out to me on Bluesky (thanks!) which makes it impossible to miss. It will work on any operating system with pretty much all major browsers. With it installed, when you go to a Steam page that has an AI disclosure, you see this before you're able to actually do anything:

I love how simple and, most importantly, how effective it is. The main thing is that it doesn't stop you taking a look; it just makes sure you're fully aware of the AI gen use. Hopefully never again will I completely waste my time playing through a game, writing it up and then suddenly remembering: "Oh yeah, I have to check if they stuck AI generation in it".

I'm sure some readers might find my stance on AI generation a bit harsh, but when there are tens of thousands of games releasing every year, my time (and yours!) is a premium currency. Why would I want to spend my time with a game full of AI generation, when I could play one where real, actual people crafted the work? I can just skip over a game with it for a different game without it. Some of the AI use just seems completely daft as well. One I saw earlier noted how it was used for "Icons + Artists Paintover for 5 icons, out of 100s of artist icons" (Kingdoms Reborn), and in that case I think... why was it even needed at all?

Using this browser userscript, and remembering to check SteamDB, it's a genuine surprise to see just how many titles have now added an AI disclosure. Even games like My Summer Car, The Outlast Trials and Siralim Ultimate have the notice.

Hopefully you find it helpful. With tools like the recent EdenSpark announcement, we're going to see more AI games.

Quoting: Nezchan
> The trouble is, they're conflicted. On the one side is copyright and their ability to squeeze all the money out of intellectual property forever.

Ah, the old "shall I be evil or even more evil" thought process.

On the other, they might get to use the technology to replace all the artists, writers, actors, etc. with free robot slaves. Or worse, someone else might get robot slaves first.

It's a tough call.

Last edited by Lofty on 22 Oct 2025 at 12:26 am UTC

I guess if it was used for texture creation it can also be quite good.

People need to remember that games were doing procedural generation long before this AI craze, and it is a very similar sort of thing.

I wish we could identify AI slop more accurately rather than just labelling everything that uses AI somewhere as bad, because it won't be long before all games use it in some form!

Last edited by Gerarderloper on 21 Oct 2025 at 10:42 pm UTC

Quoting: TheRiddick
> I'm not opposed to AI gen being used for voice acting (when done right and not stealing VA)

Define stealing. Outright plagiarism is perhaps easy to spot, but hard to prove, let alone to mount an expensive legal challenge over. But taking a VA's voice and modifying it is even more deceptive. The voice isn't just an audio signature, it's the soul and passion of the artist.

Back to the first point... this is how I see what's happening.

They know they're stealing

We know they're stealing

They know we know they're stealing

But what is a poorly paid, struggling artist going to do against an army of billionaire-backed lawyers?

As @Nezchan put it, they're probably vacillating between litigating for billions themselves and working out how many billions they can save by wiping out large portions of 'useless' humanity using AI.

Last edited by Lofty on 22 Oct 2025 at 7:54 am UTC

Quoting: Eri
> In The Finals they use it for the voice of the match commentators with mixed results... And most of the time you are too focused or talking to your team mates to pay attention to them.

But whose voice signature was it trained from? Would it be possible to ask the studio? If there is no reply, could you use a FOIA request to find out? What's the law around this, does anybody know?

> What I don't understand is where the lawyers are at?

I believe the problem is that buying a piece of media and feeding it into an algorithm to create a model isn't considered copyright infringement, because the result is a piece of software rather than a copy of the original piece of media. Yes, if you ask a picture-generating model to generate a picture of an archeologist with a hat who uses a bullwhip, it generates an image which easily infringes on a well-known intellectual property, but even then it's not the model which is infringing. Now, machine generation of media is a tool, so creating an infringing text or tune or image isn't a problem per se. If I put a bunch of cans of paint on a shelf, knock the shelf down, and the paint splatters form an image of a familiar Italian plumber with a red hat, I haven't done anything wrong. Distributing the result, however, will likely get me into hot water (if the rights holder decides to bother itself with me).

Typing this comment got me thinking, though: this kinda reminds me of software libraries. Machine-generation models are basically turning various forms of media into something like software libraries from which pieces can be reused to make something new (insofar as anything can be new when, of course, all original ideas have long been had and we're just rehashing the same stuff over and over again). Back in the day software didn't have licenses and you could freely reuse things from programs made by others, but those days came to an end. Maybe we'll see media start getting licensed to disallow its use in creating machine-generation models.

> I believe the problem is that buying a piece of media and feeding it into an algorithm to create a model isn't considered copyright infringement because the result is a piece of software rather than a copy of the original piece of media.

You're probably right, but that just means that copyright law needs to be updated.

Say they want to create a new AI that's a great interior designer, but to do that its creators need to knock on doors and be shown the insides of people's houses. Let's face it, they'd be told to do one.

The issue here is that they didn't have to knock on doors and be let in. They just scraped everything they could find on the internet and considered all that data "free".

Even when it was provably not free, such as when Meta, absolute scumbags that they are, scraped terabytes of pirated books from LibGen to train their shitty model. And then the ball-less judge ruled against the authors. Nothing about them breaking the law by torrenting LibGen, nah, just a weak excuse about "not diluting the market through their actions". Holy fuck, Zuckerberg makes me feel physically sick.

If you're still using Facebook or Insta after this, you have no soul. I can't judge you for WhatsApp, because I know from personal experience how difficult it is to get people moved to alternatives when they just don't care.

https://www.theguardian.com/technology/2025/jun/26/meta-wins-ai-copyright-lawsuit-as-us-judge-rules-against-authors

Remember Kazaa in the early 2000s? Remember that single mother being sued into oblivion because her son shared songs using it??

> In Duluth, Minnesota, the recording industry sued Jammie Thomas-Rasset, a 30-year-old single mother. On 5 October 2007, Thomas was ordered to pay the six record companies (Sony BMG, Arista Records LLC, Interscope Records, UMG Recordings Inc., Capitol Records Inc. and Warner Bros. Records Inc.) $9,250 for each of the 24 songs they had focused on in this case.

(Where are those guys? She ended up owing over £2M - which was reduced to around £45K after YEARS of retrials, because who the fuck thinks that anyone should be fined £2M for downloading/sharing files in 2003? Insane.)

Anyway. Clearly anything on the internet is free now. So that's nice.

Last edited by scaine on 22 Oct 2025 at 1:35 pm UTC

> The problem isn't just the lack of oversight, it's the ethics of it. People losing jobs due to AI, all the AI generation being trained on the works of others without their consent.

Pretty much every transformative technology destroys jobs and businesses. That's normal. People who feel threatened by said new technology typically turn into haters, thinking they can stop or at least delay it. That has never worked.

Some of the same artists now throwing spite at AI and anyone using it or being even mildly in favor of it, said the exact same thing about Photoshop and why it will kill all art. They're all using it now. And it hasn't killed art. It just made it different.

I wrote a lengthy post a while back about why I really don't think that AI will ever replace human art. Or writing. Or coding. But if you guys insist on not buying anything remotely touched by "AI slop", as some of you refer to it, you will soon find yourself unable to buy any games at all. AI will become a tool like Photoshop or a calculator. Everyone will use it at least in some capacity, because the alternative is losing your job or business. You think you can avoid games using AI even now? Think again. I am not aware of any professional software developer not using AI at least for some mundane tasks already. Maybe some are still out there, who knows. But fewer every day. Chances are that the vast majority of games already have at least some AI-generated code in them. Just because you can't see it and it's less obvious than art doesn't mean it isn't there. And soon you will be unable to identify AI art assets by counting the fingers of characters in the images, too. It's hard to enforce "have to declare AI" rules if not even experts can tell the difference. And we're about 2-3 years away from that.

Technologies have never been stopped by people hating them or the people who use them. The more constructive approach would be to talk about how to compensate creators for their works being used for training (just put a tax on the providers of commercial/non-open source models, really). Because in the end it doesn't matter what made a game as long as it's fun to play.

> AI will become a tool like Photoshop or a calculator.

I suspect a lot of what you're saying has the potential to be true. But the reason people quite reasonably despise AI in particular, and definitely don't hate Photoshop or calculators, is that those products aren't destroying the planet.

Also, they're rarely forced on you. There are alternatives to these things (that doesn't quite work with calculators as an example, but hey ho - spreadsheets, or abacuses. Okay!) and so these things have to provide real, measurable value before you buy them.

Finally, they didn't absolutely piss on existing creators by stealing their work (and hence livelihood) in order to exist.

I sincerely hope the article below is spot on, and that AI is absolutely unsustainable in the medium or long term. The only reason it exists today is that big tech is absorbing the costs up front in the hope of establishing dependency, so that when they price this tech according to its actual cost, there will be enough subscribers unwilling to do without it, and they'll pay up.

https://www.wheresyoured.at/costs/

Fingers crossed.

Last edited by scaine on 22 Oct 2025 at 6:47 pm UTC

Take, for instance, shitty game devs that currently use free (or almost free) AI to generate background art, like pictures on the walls in an RPG: the cost of generating those will suddenly be REALLY high. It will then once again go back to the artists to create that background art. Like it should have all along, dammit.

Also, side note: Ed is so great. His podcast is worth a listen as well.

Quoting: Kimyrielle
> Because in the end it doesn't matter what made a game as long as it's fun to play.

It does to some people. Whether they have a choice or not? Well, at least for now, being informed about the inclusion of AI will help people make their own ethical choices.

> Pretty much every transformative technology destroys jobs and businesses. That's normal. People who feel threatened by said new technology typically turn into haters, thinking they can stop or at least delay it. That has never worked.

It may not have worked to completely stop a technology (and that's not really what most people are concerned about), but public outcry and general activism have enabled legislators to protect their citizens from the worst impacts of said technology, or at the very least to install guard rails.

This carte blanche attitude of "suck it up, it's here, deal with it", "you are obsolete" and then "you're a hater" if you don't agree, is the kind of thinking that leads to a very bad political climate, one where there is no informed consent.

> It does to some people. Whether they have a choice or not? Well, being informed about the inclusion of AI, at least for now, will help people make their own ethical choices.

Fair enough. Everyone has their own standards of what they accept and don't. Some people think I am strange, because I boycott games forcing a male character on me, and will continue to do so until the day when there is a roughly equal number of games with female protagonists out there. I guess there are not a lot of people like me around, because these games still seem to sell very well. :D

> that cost of generating those will suddenly be REALLY high

I skimmed that article quickly, and while the numbers seem correct, that's because US/Western-made AI models all seem to have been developed as if resources don't matter at all. Chinese-made models are taking over, among other things because they can be trained and run for a fraction of that cost. The top 5 LLMs are all Chinese-made these days and most of them use a fraction of the parameters the Western models use (to the degree that you can run them on machines easily within reach even for smaller companies, no AWS needed). OpenAI and Anthropic are basically dead in the water, and unless they pull something really amazing out of the hat next year, they will be out of business.

In the end, there is absolutely nothing about that article that made me agree with the "haha, it's going to blow up!" prediction. People will realize that just throwing more computing power at the problem isn't sustainable and develop models cheaper to train and operate. Like China already did.

Quoting: Kimyrielle
> The top 5 LLMs are all Chinese-made these days and most of them use a fraction of the parameters the Western models use (to the degree you can run them on machines easily within reach even for smaller companies, no AWS needed).

Interesting... and compounding that, in the west we

Would that matter in terms of digital warfare or propaganda? Could we see restrictions on Chinese AI too?

Genuinely asking, as I have no idea how the chips would fall.

> Some of the same artists now throwing spite at AI and anyone using it or being even mildly in favor of it, said the exact same thing about Photoshop and why it will kill all art.

I don't think they did, though? There were some people who were upset about art done on software, but they were different people with different concerns. Many of the people upset about AI are generally quite pro-technology.

I think it's also worth noting that in the past, some of the concerns about new technologies have been justified--just because a new technology won, does not mean that its overall impact was positive. It just means somebody made money from it. Success and beneficial impact are two different topics.

You can go back, for instance, to the water mill. Water mills were often imposed by feudal lords. Water mills ground flour more efficiently than hand mills, they harnessed the power of water to replace human muscle power, but that's not the main reason feudal lords liked them. Feudal lords liked them because they were a central point peasants had to bring their grain, where the lord could tax it. In order to force peasants to use this central point, feudal lords actively outlawed hand mills to stop peasants from grinding their own grain at home and not paying tax. Clearly the peasants would have preferred to use the less-efficient hand mills and not pay the lord, or there would have been no need to ban them. By the time the feudal period ended, hand mills were largely obsolete; the water mill had won the technological battle, which was really a political battle. But that wasn't actually a good thing for the peasants.

Generative AI is also a very political technology, and if it succeeds (which does not look likely, at least in the current iteration) any benefits will flow overwhelmingly to uber-wealthy oligarchs. Some other benefits will go to people who, like me, can't do art, or who, like some other people, can't write their own essays. But the costs will fall on far more people than benefit, and they will be much more serious. A lot of the objections to LLM AI are essentially political in nature, and I think anyone should think two or three times before choosing to be on the oligarch side of those politics. Most other AI criticisms are about an observed dumbing down of what's on the internet from AI "slop", which does seem to be a very real phenomenon.

Photoshop was not a very political technology; it did not really shift the category of people who were doing art or how much money they made from it; to the extent that it made artists or graphic designers more productive, that mainly led to a "speed-up" in what was expected of artist production, and thus an inflation in how much art assets could be produced for any given project. Objections to it were mainly about artistic sensibilities, about the "feel" as it were of doing art, about the general idea of heightening and computer-orienting the technology around creating art. It was the kind of stuff we call "Luddism" even though it quite specifically isn't--Luddism has come to be associated with the idea of romantic, instinctive dislike of technology, but actual Luddism was about politics and the very real fact that industry was turning independent craftspeople who made decent livings into impoverished factory workers who worked 12 hour days in incredibly bad conditions. I do have some sympathy, to a point, for general appeals for less technology in our lives, but it's a quite different argument.

Just because people A didn't like technology A, and people B didn't like technology B that had applications in the same field, doesn't mean people A had the same concerns as people B, let alone that they were the same people.

Last edited by Purple Library Guy on 22 Oct 2025 at 8:38 pm UTC

Quoting: Purple Library Guy
> snip

Great post.

Do you want me to generate a microphone for you to drop...

Last edited by Lofty on 23 Oct 2025 at 9:51 am UTC

> Some people think I am strange, because I boycott games forcing a male character on me, and will continue to do so until the day when there is a halfway equal amount of games with female protagonists out there. I guess there are not a lot of people like me around, because these game still seem to sell very well. :D

29% of male players and 76% of female players prefer playing as a female character.

https://quanticfoundry.com/2021/08/05/character-gender/
