NVIDIA has revealed the first details of their third generation of RTX GPUs, based on the Ada Lovelace architecture, and DLSS 3 is coming with big improvements too.

Models announced so far include:

- RTX 4090 - $1,599 (£1,679) - 24GB GDDR6X - 450W - October 12th

- RTX 4080 16GB - $1,199 (£1,269) - 16GB GDDR6X - 320W - November (no exact date yet)

- RTX 4080 12GB - $899 (£949) - 12GB GDDR6X - 285W - November (no exact date yet)


Some other features mentioned:

- Streaming multiprocessors with up to 83 teraflops of shader power — 2x over the previous generation.

- Third-generation RT Cores with up to 191 effective ray-tracing teraflops — 2.8x over the previous generation.

- Fourth-generation Tensor Cores with up to 1.32 Tensor petaflops — 5x over the previous generation using FP8 acceleration.

- Shader Execution Reordering (SER) that improves execution efficiency by rescheduling shading workloads on the fly to better utilize the GPU’s resources. As significant an innovation as out-of-order execution was for CPUs, SER improves ray-tracing performance up to 3x and in-game frame rates by up to 25%.

- Ada Optical Flow Accelerator with 2x faster performance allows DLSS 3 to predict movement in a scene, enabling the neural network to boost frame rates while maintaining image quality.

- Architectural improvements tightly coupled with custom TSMC 4N process technology result in an up to 2x leap in power efficiency.

- Dual NVIDIA Encoders (NVENC) cut export times by up to half and feature AV1 support. The NVENC AV1 encode is being adopted by OBS, Blackmagic Design DaVinci Resolve, Discord and more.

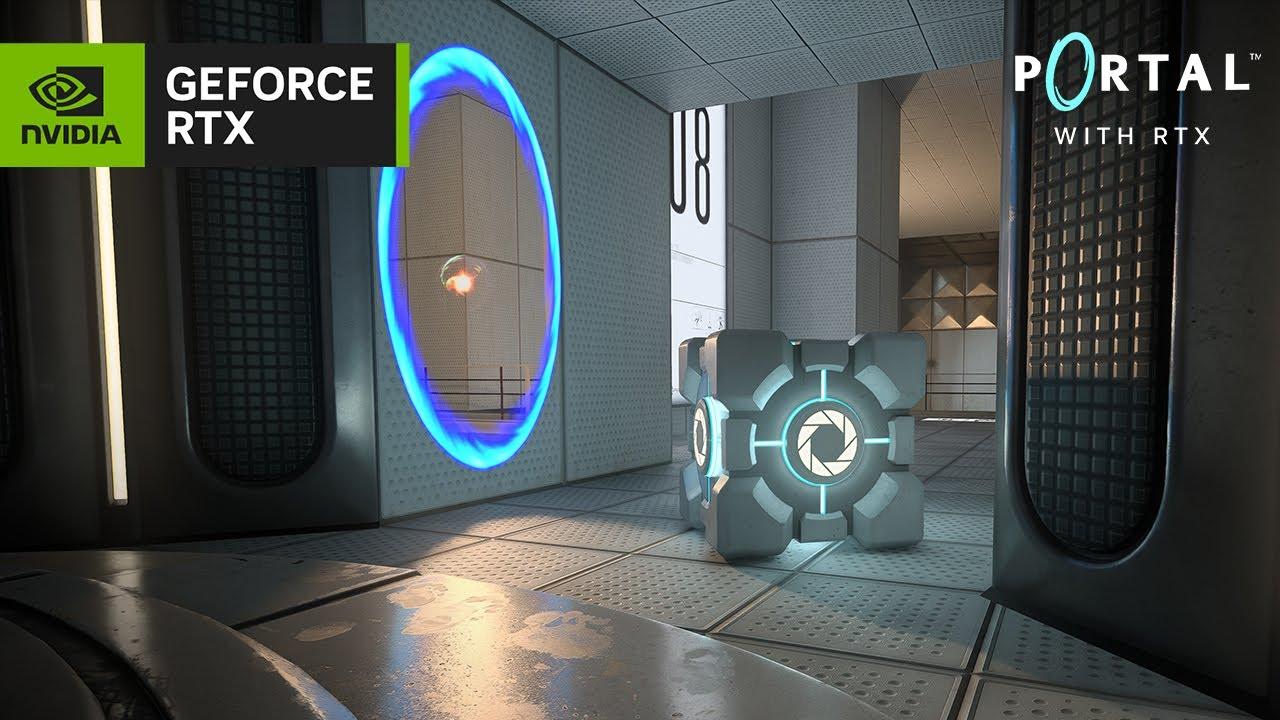

During the event they showed off Cyberpunk 2077 and Microsoft Flight Simulator running with DLSS 3, and the performance uplift did seem pretty impressive. Oh, and Portal RTX is coming as free DLC in November.


"DLSS is one of our best inventions and has made real-time ray tracing possible. DLSS 3 is another quantum leap for gamers and creators," said Jensen Huang, founder and CEO of NVIDIA. "Our pioneering work in RTX neural rendering has opened a new universe of possibilities where AI plays a central role in the creation of virtual worlds."

DLSS 3 will release alongside Ada Lovelace on October 12th, and these games and engines will support it:


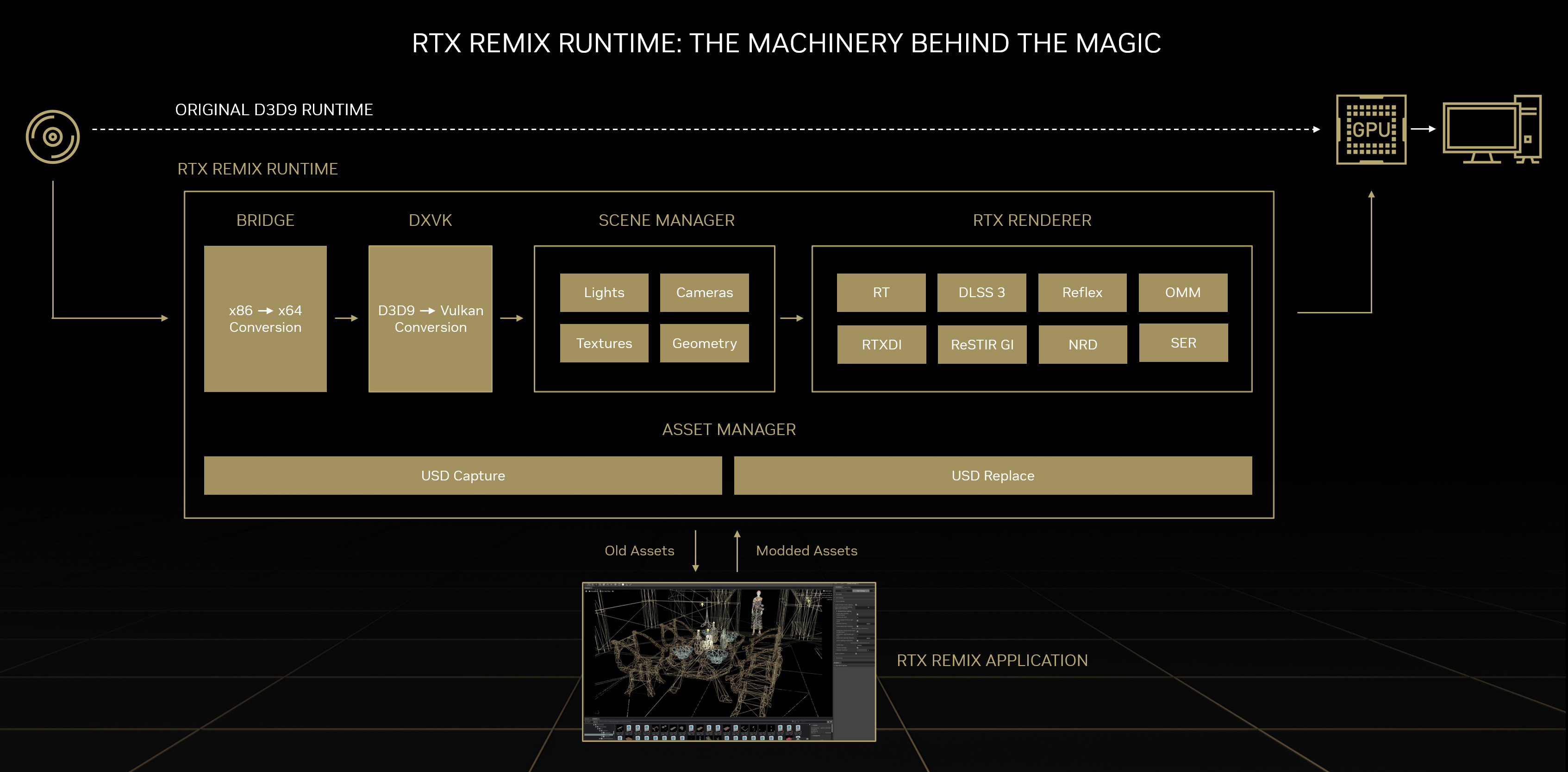

Oh, they also announced RTX Remix, a free modding platform built on NVIDIA Omniverse that they say lets people make RTX mods for various games with "enhanced materials, full ray tracing, NVIDIA DLSS 3, and NVIDIA Reflex". It will support DirectX 8 and DirectX 9 games with fixed-function graphics pipelines.

Those with a keen eye might spot a familiar bit of open-source tech being used for it too:

That's DXVK, for those who don't quite get it: the same translation layer Proton uses to run the Direct3D in Windows games on Vulkan. So all mods made with it will run with Vulkan!



In other related NVIDIA GPU news, EVGA recently broke off from NVIDIA and will no longer make graphics cards with NVIDIA GPUs. In a brief announcement on their official forum, they posted:

Hi all,

You may have heard some news regarding the next generation products from EVGA. Please see below for a message on future products and support: EVGA is committed to our customers and will continue to offer sales and support on the current lineup. Also, EVGA would like to say thank you to our great community for the many years of support and enthusiasm for EVGA graphics cards.

- EVGA will not carry the next generation graphics cards.

- EVGA will continue to support the existing current generation products.

- EVGA will continue to provide the current generation products.

EVGA Management

I will wait and see what AMD will do.

Quoting iskaputt: "And here is me, contemplating when to get a new card in the ~200 watt, 400-500 euro region."

My absolute limit for a PC's total power draw is around 275-300W once you factor in a couple of monitors. With no AC in summer, I barely get to play a game for any length of time before the temperature creeps up, especially in humid weather. I suppose it depends on the building and room size, but I can't imagine doubling that, let alone the energy costs!

Steam Deck 2.0 FTW! I think that will be a go-to for a lot of people's summer gaming, since you can sit outside in the shade and still game. Things really are changing in the PC gaming world, and you would think that, given the success of the NVIDIA-powered Nintendo Switch, NVIDIA would have released or teased a Steam Deck equivalent by now instead of focusing on the wealthiest gamers and industrial customers.

Quoting redneckdrow: "Too little, too late, in my case. I'm extremely happy with my new RX 6600!"

Mine is arriving tomorrow, and it'll be the first time I haven't had an NVIDIA GPU in my desktop.

After the EVGA announcement, I decided I'm done with NVIDIA and bought this card.

It would be really nice if EVGA decided to make AMD cards, and if other NVIDIA board partners moved away from NVIDIA and started making AMD and Intel cards instead.

Quoting Guest: "You know why they implement RTX for those closed-space or two-decade-old games? Because the performance hit won't be as big as doing it for open-world games with a dynamic day/night cycle, where ray tracing would help the most to provide GI or at least global occlusion."

Nothing to do with day/night cycles. Those look great with ray tracing; see Q2RTX, for example. It's because open areas need more rays to adequately hit every surface. Just geometry.

Quoting cookiEoverdose: "Haha, every time: 'quantum leap'. They don't know what that actually means."

Yup, every time I see a company describe something as a 'quantum leap', I feel compelled to point out that the definition of quantum is literally 'the smallest amount or unit of something'.

So what they're saying is that DLSS 3 is the smallest possible improvement they could make over DLSS 2? Did they fix a typo and increase the version number?

Quoting Guest (replying to CatKiller): "If there is no day/night cycle, then baking light and reflection data is possible, and there isn't much need for real-time ray tracing. That's why I mentioned it in the first place."

Exactly.

It's easy to take these old games from a decade or more ago, with their relatively simple lighting engines, and throw some ray tracing on them for a noticeable difference. You could make a game like Portal look almost as good as the ray-traced result simply by updating the game engine to a more recent version, increasing the resolution of the baked lightmaps, placing more light probes, using more recent real-time approximations of GI such as screen-space GI, adding more real-time light sources with shadow mapping enabled, etc.

In the traditional boxy level designs of old first-person games like Doom, you could easily set up a lightmap, some light probes, and reflection probes with parallax projection shapes, bake the result, and end up with something so close to the ray-traced result that in many cases ray tracing is simply not worth it.

Even in outdoor scenes, if the lighting is static (a Counter-Strike or Half-Life level, for example), baked lighting can look fantastic. This is how most game engines have worked for a long time, and how most still work today.

This is exactly what I do in Blender: when I set up a scene to be rendered in Eevee instead of Cycles, I set up and bake the lighting, bake reflection probes, etc., and the results I get from a 2-second Eevee render are almost as good as a 5-minute render from Cycles, which is a full-blown path tracer.

The only issue is dynamic changes in lighting, such as a dynamic day/night cycle, but even in those situations there are options: baking all the non-sky lighting separately from the sky, keeping several baked versions of the sky lighting if the day/night cycle is always the same with no weather variations, or other trickery to still fall back to baked results.
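As a rough illustration of the idea of baking the sky lighting separately at a few fixed times of day, here is a minimal Python sketch. It assumes a repeating 24-hour cycle, treats each lightmap as a flat list of texel intensities, and blends linearly between the two nearest bakes; the function names and data layout are hypothetical and not taken from Blender, Godot, or any other engine.

```python
from bisect import bisect_right


def blend_baked_sky(snapshots, hour):
    """Blend the two baked sky-light snapshots that bracket `hour`.

    `snapshots` maps a time of day in hours (0-24) to a baked sky-light
    lightmap, simplified here to a flat list of texel intensities.
    The cycle is assumed to repeat every 24 hours, so the last snapshot
    of the day blends back into the first one across midnight.
    """
    times = sorted(snapshots)
    hour %= 24.0
    i = bisect_right(times, hour)
    t0 = times[i - 1]                 # latest bake at or before `hour` (index -1 wraps to the last)
    t1 = times[i % len(times)]        # next bake, wrapping past midnight
    span = (t1 - t0) % 24.0 or 24.0   # a single bake covers the whole day
    w = ((hour - t0) % 24.0) / span   # 0.0 at t0, approaching 1.0 at t1
    a, b = snapshots[t0], snapshots[t1]
    return [(1.0 - w) * x + w * y for x, y in zip(a, b)]


def final_lighting(static_bake, sky_bake):
    """Non-sky lighting is baked once; only the sky term changes over the day."""
    return [s + k for s, k in zip(static_bake, sky_bake)]


if __name__ == "__main__":
    # Hypothetical two-texel scene with a midnight bake and a noon bake.
    sky = {0.0: [0.0, 0.0], 12.0: [1.0, 2.0]}
    static = [0.25, 0.5]
    print(final_lighting(static, blend_baked_sky(sky, 6.0)))  # prints [0.75, 1.5]
```

Linear blending is just the simplest choice here; a real engine would do this per texel on the GPU and might use more bakes or a smoother interpolation curve.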

And sometimes the difference really isn't noticeable even if the baked result is 'wrong'. If it's close enough, most people couldn't tell whether it's wrong just by looking at it; they'd need a side-by-side comparison with the 'ground truth' ray-traced result to know for sure where or how it's wrong.

There are also more modern solutions like Godot's SDFGI, which is real-time too.

And if you combine those kinds of algorithms with something like screen-space ray marching for global illumination, which basically takes the approximated or baked result and 'corrects' it wherever the information available in screen space allows, the result is almost perfect at a fraction of the performance cost of ray tracing.

Why use 10x the processing power to compute a result that looks barely any different to an approximated result?

There are a lot of ways in which real-time ray tracing doesn't make sense...

Real-time ray tracing makes the most sense in situations where the lighting setup is so dynamic there is basically no room for baking. But not many games tick that box: mostly survival building/crafting games, or games like No Man's Sky, where the game world is procedural and huge, so baking lighting is just out of the question.

Last edited by gradyvuckovic on 21 September 2022 at 1:35 am UTC

https://www.videocardbenchmark.net/gpu_value.html

I asked Phoronix about doing something similar (with Linux benchmarks, of course!), but I think he didn't want to get into tracking pricing (although it would probably help his funding via affiliate links).
