The Godot Engine team recently posted about more issues with "AI slop", including various pull requests that have become a big drain on resources.

You've likely seen other projects talking about this across the net, because AI agents and people who use various generative AI tools are generating code and submitting it to lots of projects to pump up their numbers - often while having no clue what the code does and not even testing it. This is becoming a bigger problem as time goes on.

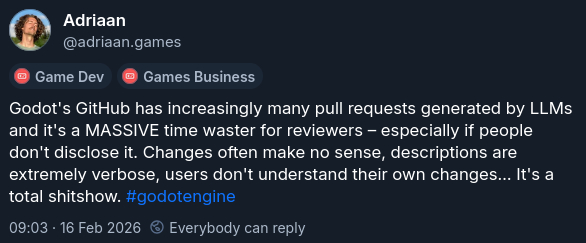

Writing on Bluesky a few days ago, the Director at game dev studio Hidden Folks wrote:

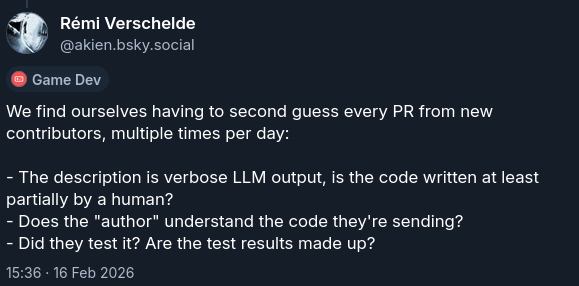

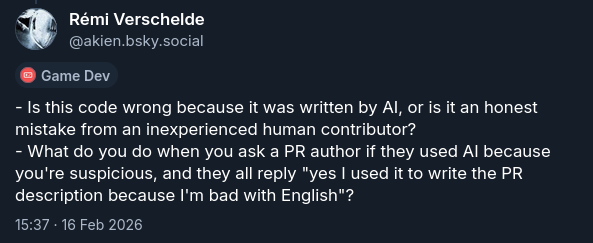

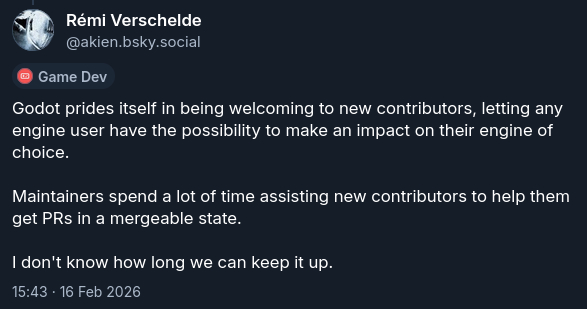

This led to a Bluesky thread from Godot Project Maintainer Rémi Verschelde that mentions how "draining and demoralizing" the influx has become, and how they end up second-guessing "every PR from new contributors".

There seems to be no easy answer here: someone or something has to filter through the increasing noise of both people and bots shovelling out AI-generated code to various projects like Godot. One solution that Verschelde mentioned being suggested is using AI to fight AI, which they said "seems horribly ironic", but they might have to eventually.

Even GitHub themselves are aware of the problematic situation, with a post from the GitHub Project Manager Camilla Moraes a few weeks ago saying they're exploring solutions to "the increasing volume of low-quality contributions that is creating significant operational challenges for maintainers".

Find out how you can help fund Godot on their funding page.

Quoting: dpanter
> Ban AI-generated everything.

Amen

Quoting: dpanter
> Ban AI-generated everything. Is it optimal? No, but practical and likely the only reasonable option if Godot wants to remain open to new contributors. These AI slop "contributions" aren't contributing to anything positive.

I'm tempted to agree, but this is both impractical and would throw out the small percentage of valid cases.

A better approach would be to require all PRs to be hand-reviewed *before* submission, and vetted by a human. It's equally impractical, but if we look at the cURL project as an example, it is possible for competent devs to use LLMs and similar as tools to raise appropriate issues and fixes, and formulate them properly. The key element is: a human used a tool vs. a tool used a human.

I think it's perfectly reasonable to ask people to understand what it is they are submitting, because they are asking me to maintain it going forward.

Personally, I feel that LLMs, as they are being pushed, have only superficial value. I honestly feel that if they had never happened, the whole world would be in a better place and we would be more productive as a society.

Not that the tech is bad, just the politics of the tech.

😡

Quoting: Cley_Faye
> Quoting: dpanter
> > Ban AI-generated everything. Is it optimal? No, but practical and likely the only reasonable option if Godot wants to remain open to new contributors. These AI slop "contributions" aren't contributing to anything positive.
>
> I'm tempted to agree, but this is both impractical and would throw the small percentage of valid cases.

Ban is simple and simple things are practical.

So we need to get rid of, or not put in place, some systems because they (may) hurt a small percentage... I agree. First to come to mind... vaccination... is both impractical and hurts percentages /s

> using AI to fight AI which they said "seems horribly ironic" but they might have to eventually

A couple of years ago, I was on a cyber security panel which asked "what is the best use for AI, in your business". We were a panel of investment managers, and the top answer was "writing out responses to client due diligence questionnaires". The second-to-top answer was "reading responses from our own vendor due diligence questionnaires".

You couldn't make it up.

But no, fighting AI with more AI is a poor choice that simply feeds the beast (in this case, the beast being AI itself). A ban on AI is ideal... except it will become increasingly difficult to know when AI has been involved.

I wonder if it's possible that new PRs (that is, PRs from new contributors) go into a queue, and a voting system is introduced among GitHub users. This wouldn't be the Godot team themselves, just interested parties. As more people vote on the "good" PRs, or at least the desirable PRs, they rise to the top, and only then get reviewed by the Godot team. Once they've contributed (well) once, they skip the queue for future PRs.

It's far from simple, but there's a crowd of interested parties out there, and it would be a shame not to give that crowd some agency on the prioritisation of new contributions.
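To make the queue idea above a bit more concrete, here is a minimal sketch of how such a triage flow might be modelled. Everything in it is hypothetical: the `TriageQueue` and `PullRequest` names, the vote threshold, and the "trusted after one merge" rule are assumptions for illustration, not anything GitHub or Godot actually offer.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    number: int
    author: str
    votes: int = 0


@dataclass
class TriageQueue:
    """Hypothetical community-voting queue for PRs from new contributors."""
    vote_threshold: int = 5                       # assumed value, not from the thread
    trusted_authors: set = field(default_factory=set)
    pending: dict = field(default_factory=dict)   # PR number -> PullRequest
    review_list: list = field(default_factory=list)

    def submit(self, pr):
        # Authors with a previously merged PR skip the community queue.
        if pr.author in self.trusted_authors:
            self.review_list.append(pr)
        else:
            self.pending[pr.number] = pr

    def upvote(self, number):
        pr = self.pending[number]
        pr.votes += 1
        # Enough community votes: promote to the maintainers' review list.
        if pr.votes >= self.vote_threshold:
            self.review_list.append(self.pending.pop(number))

    def mark_merged(self, pr):
        # One good contribution earns the author a queue bypass.
        self.trusted_authors.add(pr.author)


queue = TriageQueue(vote_threshold=2)
first = PullRequest(number=1, author="newcomer")
queue.submit(first)            # waits in the community queue
queue.upvote(1)
queue.upvote(1)                # reaches the threshold, moves to review
queue.mark_merged(first)       # maintainers merged it
queue.submit(PullRequest(number=2, author="newcomer"))  # skips the queue
print([pr.number for pr in queue.review_list])  # [1, 2]
```

The sketch deliberately ignores the hard parts, such as vote brigading by bots, which a real system would have to deal with.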

that would limit how much the "ai bros" can flood the repo with bad submissions, and help pay the costs of reviewing the submissions

How matplotlib's attempt at moderating AI submissions went:

https://theshamblog.com/an-ai-agent-published-a-hit-piece-on-me/

Oh brave new world, that has such LLMs in it!

Quoting: whizse
> How matplotlib's attempt at moderating AI submissions went:
>
> https://theshamblog.com/an-ai-agent-published-a-hit-piece-on-me/
>
> Oh brave new world, that has such LLMs in it!

I heard about this the other day, because Ars Technica then ran (and [removed](https://arstechnica.com/staff/2026/02/editors-note-retraction-of-article-containing-fabricated-quotations/)) an article, which used AI which entirely made up quotes about it all.

Last edited by Liam Dawe on 18 Feb 2026 at 2:42 pm UTC

Quoting: Cley_Faye
> A better approach would to be require all PR to be hand-reviewed *before* submission, and vetted by a human.

Sure.

Unfortunately not a feasible option in this dire situation.

Stemming the tide as soon as possible is imperative for Godot's survival.

Quoting: Cley_Faye
> Quoting: dpanter
> > Ban AI-generated everything. Is it optimal? No, but practical and likely the only reasonable option if Godot wants to remain open to new contributors. These AI slop "contributions" aren't contributing to anything positive.
>
> I'm tempted to agree, but this is both impractical and would throw the small percentage of valid cases.
>
> A better approach would to be require all PR to be hand-reviewed *before* submission, and vetted by a human. It's equally impractical, but if we look at the cURL project as an example, it is possible for competent devs to use LLM and similar as tools to raise appropriate issues, fixes, and formulate them properly. Key element is, a human used a tool vs. a tool used a human.

A small percentage of false positives is better than the several times of increased effort spent on reviewing.

Often the best rule is not so much "is it LLM-generated" but rather "does it look LLM-generated", because if you reviewed and cleaned-up your partially-LLM-generated submission well, it shouldn't at all be "obvious" that it was LLM-generated.

Last edited by coolitic on 18 Feb 2026 at 3:35 pm UTC

Quoting: dpanter
> Ban AI-generated everything. Is it optimal? No, but practical and likely the only reasonable option if Godot wants to remain open to new contributors. These AI slop "contributions" aren't contributing to anything positive.

Your "solution" is naive at best, because you wrongly assume that people would care about such a ban any more than they care about speed limits. And in contrast to what you're probably assuming, it's often not very obvious that code was AI generated, particularly if some telltale signs are removed (e.g. overly verbose comments that LLMs love so much).

Also, legitimate developers use AI tools too, so you'd technically ban a lot of solid code contributions because their authors included a vibecoded function or two. Not sure if that's the intended effect, but hey.

Quoting: whizse
> How matplotlib's attempt at moderating AI submissions went:
>
> https://theshamblog.com/an-ai-agent-published-a-hit-piece-on-me/
>
> Oh brave new world, that has such LLMs in it!

Thank you for sharing, that was a hell of a read.

Quoting: Kimyrielle
> you wrongly assume

I find your assumptions about my assumptions presumptuous.

Don't give me that "AI code can be good too" nonsense, that's not what is happening here. Legitimate developers wouldn't need to vibecode slop, nor PR untested/unknown code willy-nilly.

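'Banning a lot of solid code contributions because their authors included a vibecoded function or two' sounds absolutely reasonable in this catastrophic AI slop onslaught on Godot.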

Quoting: dpanter
> Quoting: Kimyrielle
> > you wrongly assume
>
> I find your assumptions about my assumptions presumptuous.
>
> Don't give me that "AI code can be good too" nonsense, that's not what is happening here. Legitimate developers wouldn't need to vibecode slop, nor PR untested/unknown code willy-nilly.
>
> 'Banning a lot of solid code contributions because their authors included a vibecoded function or two' sounds absolutely reasonable in this catastrophic AI slop onslaught on Godot.

Well, if that's the best you can do to defend your knee-jerk "solution" to the problem, I am glad you don't have any say in the matter, so cooler heads than yours can look for one. *shrug*

Quoting: dpanter
> Quoting: Kimyrielle
> > you wrongly assume
>
> I find your assumptions about my assumptions presumptuous.
>
> Don't give me that "AI code can be good too" nonsense, that's not what is happening here. Legitimate developers wouldn't need to vibecode slop, nor PR untested/unknown code willy-nilly.
>
> 'Banning a lot of solid code contributions because their authors included a vibecoded function or two' sounds absolutely reasonable in this catastrophic AI slop onslaught on Godot.

I haven't got the faintest idea how you would want to find out.
